Death of the Default Cube

Some ramblings on generative AI for 3D asset creation

In my last essay, I explored artificial intelligence’s potential to transform the video game industry. This essay delves deeper into one specific aspect: the use of generative AI for asset creation, in particular the 3D model.

There is a lot of discourse on what generative AI will be able to do in the near future, and one of the most often mentioned tasks in gaming is 3D asset generation. I spent the first half of my twenties learning and using 3D pipelines and software. It takes many hours to master the multiple programs required to produce 3D assets, on top of artistic ability in sculpting, texturing and general aesthetic understanding, so I am both particularly interested in and sceptical of current attempts to automate it.

What is a 3D model?

A 3D model is a digital representation of an object in three dimensions, often created using software like Blender, Maya or ZBrush. These models are built from vertices, edges and faces (polygons) that together form a mesh. This mesh is then textured, coloured, and (if needed) rigged/skinned for animation, making 3D models versatile tools in various digital applications. Today's immersive open world games like Skyrim and Zelda: Breath of the Wild, and Hollywood's cinematic spectacles like Avatar, are all built on the foundation of 3D modelling advancements. Since its inception in the 1960s, 3D modelling software has completely changed the media we consume, not only enhancing the visual experience but also expanding the possibilities for storytelling and interactive media.
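To make the vertices, edges and faces idea concrete, here is a minimal sketch of a mesh as plain data, using the default cube as the example. The names `Mesh` and `make_cube` are my own for illustration, and this is not how Blender or any engine actually stores meshes internally; it only shows the relationship between the three building blocks.

```python
from dataclasses import dataclass


@dataclass
class Mesh:
    """A bare-bones polygon mesh: positions plus faces that index into them."""
    vertices: list  # list of (x, y, z) tuples
    faces: list     # list of tuples of vertex indices, one loop per polygon

    def edges(self):
        """Return the unique undirected edges implied by the face loops."""
        unique = set()
        for face in self.faces:
            for i, a in enumerate(face):
                b = face[(i + 1) % len(face)]  # wrap around to close the loop
                unique.add((min(a, b), max(a, b)))
        return unique

    def triangulated(self):
        """Fan-triangulate every face (valid for convex polygons such as quads)."""
        triangles = []
        for face in self.faces:
            for i in range(1, len(face) - 1):
                triangles.append((face[0], face[i], face[i + 1]))
        return triangles


def make_cube(size=2.0):
    """Build a cube centred on the origin (hypothetical helper)."""
    h = size / 2
    # Vertex index is 4*x_bit + 2*y_bit + z_bit, where a bit of 0 means -h and 1 means +h.
    vertices = [(x, y, z) for x in (-h, h) for y in (-h, h) for z in (-h, h)]
    faces = [
        (0, 1, 3, 2),  # x = -h
        (4, 6, 7, 5),  # x = +h
        (0, 4, 5, 1),  # y = -h
        (2, 3, 7, 6),  # y = +h
        (0, 2, 6, 4),  # z = -h
        (1, 5, 7, 3),  # z = +h
    ]
    return Mesh(vertices, faces)


cube = make_cube()
print(len(cube.vertices), len(cube.edges()), len(cube.faces))  # 8 12 6
print(len(cube.triangulated()))                                # 12
```

Game engines generally render triangles, so the six quads above become twelve triangles. Texturing, rigging and optimisation all add further data on top of this basic structure.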

Despite technological advancements, 3D modelling remains a complex and skill-intensive process, particularly in video game development. Creating game-ready 3D assets means balancing visual quality against performance optimisation. That involves creating ‘clean’ topology, UV unwrapping, texturing, and rigging, all of which must align with the technical specifications of the intended game engine. This optimisation is crucial to maintain smooth gameplay and visual coherence within the game world.

The standard process for creating a 3D game-ready asset involves multiple essential stages:

Modelling (varies depending on whether it’s organic or manmade)

Low-poly block out with shapes (Hard body modelling)

Sculpting (Soft body modelling)

Retopology and UV Unwrapping

Texturing/Shading

Rigging / weight painting

Optimisation (for game engines like Unreal or Unity)

This video showing the creation of a low-poly sword is a good example of the process an artist goes through to create a game-ready asset:

Consider this example: a "Stylized Pirate Tavern" asset pack from the Unreal Marketplace. It's a modular pack, meaning it includes 181 unique, interlocking pieces designed to create a complete scene. These meshes are simple and low-poly, and if we assume it takes about an hour to create each one, the total effort would be 181 hours, or roughly 7.5 days of round-the-clock work. This timeframe might be manageable for a game set in a single environment. However, most AAA games feature large, varied worlds with tens of thousands of assets, which significantly increases the production time.
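As a back-of-the-envelope check on those numbers, here is a small sketch. The one-hour-per-asset figure is the assumption from the paragraph above; the eight-hour working day and the function name `production_estimate` are my own additions for illustration.

```python
def production_estimate(asset_count, hours_per_asset=1.0, hours_per_workday=8.0):
    """Rough effort estimate; every input here is an assumption, not measured data."""
    total_hours = asset_count * hours_per_asset
    return {
        "total_hours": total_hours,
        "nonstop_days": total_hours / 24,                 # working around the clock
        "working_days": total_hours / hours_per_workday,  # one artist, 8-hour days
    }


pack = production_estimate(181)
print(pack["total_hours"])             # 181.0
print(round(pack["nonstop_days"], 1))  # 7.5
print(round(pack["working_days"], 1))  # 22.6
```

In other words, the 7.5 days only holds if nobody sleeps. At a normal working pace, the same pack is closer to a month of one artist's time, even before scaling up to the asset counts of a large game.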

Other more complex objects, like characters that need to be rigged in order to be animated inside a game, can take weeks to produce. Rigging in 3D modelling requires a specific topology (how the vertices and edges are arranged) to ensure that the mesh deforms naturally and realistically when animated.

Generating 3D content with AI

Software ate the world but now AI is eating software. Recent breakthroughs in generative AI are altering our approach to creative processes. So is 3D modelling next?

I think current 2D generation technologies are impressive. When I was working as a visual artist, time was also a big issue. For example, it took me around 60 hours* just to render this music video. (*Using Blender Cycles at about one minute per frame: 3,624 frames at 24 fps. At the time I had an RTX 2080 Ti GPU.)
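The arithmetic behind that footnote, as a quick sketch (the function names are mine, and one minute per frame is an average, not a measured constant):

```python
def render_hours(frame_count, minutes_per_frame):
    """Total render time in hours for a fixed per-frame cost."""
    return frame_count * minutes_per_frame / 60


def clip_seconds(frame_count, fps):
    """Length of the finished clip in seconds."""
    return frame_count / fps


print(round(render_hours(3624, 1.0), 1))  # 60.4
print(clip_seconds(3624, 24))             # 151.0
```

So roughly two and a half minutes of footage cost about two and a half days of continuous GPU time.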

Below, the image on the left is a CGI image I rendered. It probably took me a few hours to set up the scene (not to mention the four or so years I had been using Blender). The image on the right was created with ChatGPT (DALL·E). It took around 30 seconds and only required the prompt: "image of a hand and part of an arm covered in vibrant green moss, emerging from the ground".

This isn't to say AI will render (no pun intended) CGI obsolete. There's value in the intention and control inherent in manual creation. However, AI opens up exciting avenues for artistic expression by lowering technical barriers. It's not just about speed or ease; it's about making creative experimentation more accessible.

The problem of generating 3D assets

Generating 3D models for games is not as straightforward as creating images or text when using AI. This complexity stems from several factors:

Complex Decision-Making: AI must understand how different parts can be combined in various ways, which requires complex decision-making capabilities.

Style Consistency: Most assets are created as a ‘pack’ so they can be used to build a level. Maintaining a consistent style across modular parts is challenging for AI, as it needs to recognise and replicate the aesthetic nuances of each component.

Understanding Context: AI models may struggle to understand the context in which the asset will be used, impacting the relevance and functionality of the generated parts. For example, a door needs to fit into a door frame.

Optimisation: AI-generated models might not always be optimised for game engines, leading to performance issues.

Learning from Limited Data: Unlike text or images, there isn't a huge amount of data to train models on. If the AI doesn't have enough examples of well-made modular assets, it cannot learn how to create them effectively.

Current approaches to generating a 3D asset are like growing a tree every time you want to build a table and cutting it out as one shape, rather than producing it from a set of parts. A 3D artist wouldn’t start from scratch for every model, particularly when it comes to texturing a mesh, so the challenge lies in teaching AI to replicate this efficiency and creative intuition.

The current state of Text-to-3D

There are already multiple funded companies working on 3D asset generation. They use conditional generative models like OpenAI’s Shap·E, which represents a significant advancement in the field. Shap·E stands out because it doesn't just produce one type of output. Instead, it generates parameters for implicit functions that can be rendered in two different ways: as textured meshes, which are commonly used in video games and animations, and as neural radiance fields (NeRFs), a volumetric representation that stores density and view-dependent colour to capture how a scene looks from different angles.

The training of Shap·E involves two key stages. Firstly, an encoder is trained to deterministically map 3D assets into a set of parameters for these implicit functions. This means it takes a 3D model and turns it into a format that Shap·E can work with. Secondly, a conditional diffusion model is trained on the encoder's outputs. This is the generative part: given a condition such as a text prompt, it learns to produce new sets of parameters, which can then be rendered as a 3D asset.

When this system is trained on a large dataset of both 3D models and related text descriptions, it becomes capable of generating a wide range of complex and varied 3D assets very quickly.

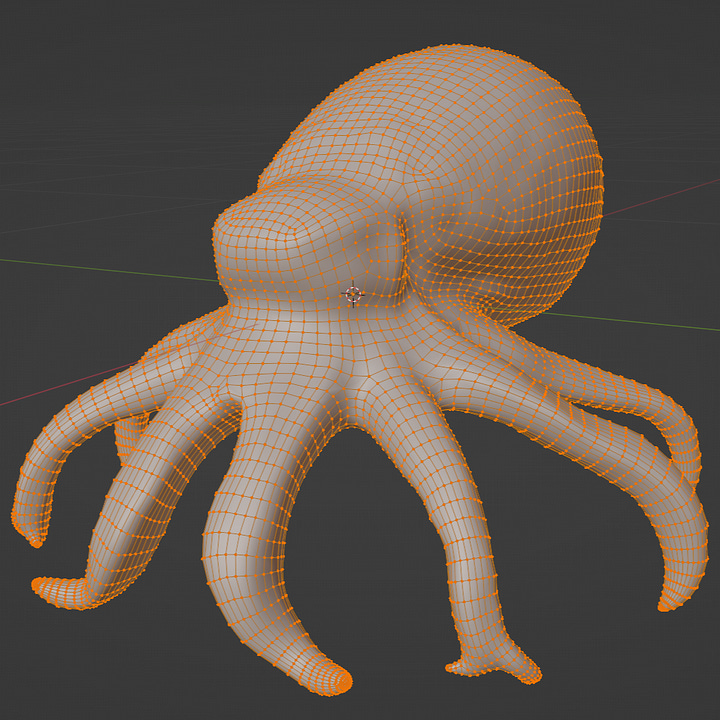

The most promising text-to-3D product I’ve seen is Luma. Here’s an example from their Discord where a user prompted:

"a octopus squid alpha,Bioluminescent patterns adorn its body, pulsating in intricate designs that serve both as communication signals and a means of mesmerizing prey,offering a diverse mix of lizard, snake, turtle, and crocodile faces. game ready, highquality, scary, intricate detailing.game asset,intricate, pbr materials, miniature model, production quality"

As a static mesh, the result was impressive: a well-followed prompt yielding a visually appealing mesh and texture. Looking deeper, their inbuilt re-mesher delivered the mesh at 8K polygons, which is OK but not optimal and could definitely be lower.

Could it be used in a game as is? Yes, as a static object with no functionality. Could it be used as a starting point for a 3D artist to speed up their workflow? Absolutely, although for any major changes the texture would need to be redone. Could you populate an entire game world with generated assets? At the expense of performance. Could you create your hero assets for a game? Definitely not.

As demonstrated in the sword video, to get the texture maps that provide most of the asset’s detail, you need to first create a high poly version and then a low poly efficient model for the engine. The lengthy pipeline for creating a game-ready model I described earlier is complex for a reason. This complexity is necessary to ensure that the final model meets the high standards required for video games, which include not just visual appeal but also technical efficiency and compatibility with game engines. They have to work in a game.

When it comes to assets that need to be rigged and animated, using these generated meshes becomes particularly tricky. For effective rigging, especially for characters, the topology needs to mimic the flow and structure of muscles and joints in a real body. This allows for smooth bending and movement, without unnatural distortions or stretching of the mesh. Proper topology is crucial for achieving lifelike animations, particularly in areas with complex movements like the face or hands. The same principle applies to hard-surface objects: a car door needs to be a separate piece from the car body in order to open and close.

Looking to the future

The advancement in AI-generated 3D models has been impressive over recent years. However, as highlighted, the current outputs still demand considerable manipulation to be transformed into optimised, game-ready assets. There's a need for adjustments in the generative process to yield assets that are more aligned with rigid gaming requirements.

A significant challenge lies in the oversimplification of the asset creation process. By amalgamating all stages into a single step, key elements crucial for utility in game engines and flexibility in use are often missed. This lack of nuanced control also poses a problem, especially in maintaining the integrity of a game's intellectual property and lore. To address these issues, the introduction of more detailed parameters and computer graphics techniques is essential, particularly for managing CG-related weights and biases in models. Just as video generation platforms allow users to manipulate camera movement and film styles, 3D generators need to give users more control over important features such as polycount, stylisation and texture settings.

While current text-to-3D technology offers exciting prospects and can enhance the efficiency of the 3D asset creation process, it is not yet a complete solution for the complex demands of game development. The need for skilled 3D artists remains critical to achieving the high-quality, functional, and interactive assets essential in modern video games. In the short term, it could be used to speed up certain stages, like mesh or texture generation, to increase productivity.

Long term, the development of a holistic asset creation tool appears achievable, considering the progress in text-to-3D technology and the refinement of tools with more 3D-focused tuning. Nonetheless, for such tools to be truly effective, they must be designed with an understanding of game engine limitations and requirements, which requires a deeper knowledge of game-ready pipelines; I have yet to see a generative AI company hire a 3D artist.

Conclusion: Death of the default cube?

Creating 3D assets is a complex, costly and time-intensive aspect of video game development, which is why many studios opt to use premade assets from marketplaces or outsource the creation to external studios. The introduction of generative solutions could provide a more flexible and cheap solution to IP creation, although we are probably a year or two away from results that are truly useful for studios. As with many other technological advancements in CGI, like ZRemesher, AI-powered tools will aid artists in the arduous stages (like retopology, UV unwrapping and rigging) involved in creating 3D assets, hopefully allowing them more time to focus on the creative parts.

For studios, the cost and complexity of production often restrict creative experimentation, potentially stifling innovation or risk-taking in the final product. However, relying heavily on generated content also limits the diversity and uniqueness of assets, as AI is great at replicating what has already been done but not so good at innovative design.

The art in video games is often one of the most memorable aspects, from classic characters like Pac-Man's ghosts to Mortal Kombat's Raiden. 3D assets and characters are more than just digital objects; they are integral to the game's identity, storytelling and play. Replacing, rather than enhancing, the work of 3D artists, who infuse these assets with depth, character, and individuality, with an automated system would be a significant loss.

The challenge for video games confronting generative AI is to find a balance between leveraging these new technologies to enhance efficiency and reduce costs, and preserving artistic expression and, most importantly, intention. This balance is crucial for continuing to push the boundaries of what is possible in gaming, both technically and creatively.